Have you been looking for a reliable ML validation tool? Or do you need assistance in assessing the performance of your machine-learning models?

Look no further—Deepchecks might just be the tool you need!

This comprehensive overview of Deepchecks will walk you through how this ML validation tool works and how it can improve your ML workflows.

What is Deepchecks?

Deepchecks is an open-source machine-learning validation tool that helps you assess the performance of your machine-learning models.

This Python-based open-source package puts an emphasis on assessing models in both the research and production phases. It helps you verify that your models are well-calibrated and ready for deployment.

Put simply, Deepchecks helps data scientists like you quickly identify training, deployment, and production issues before they become a problem.

As a data scientist who has worked on basic machine-learning systems and models, I find this very exciting, since catching mistakes early can save a lot of time and effort.

Let’s have a look at what this tool can do exactly!

What Does Deepchecks Do?

Deepchecks offers features to help you evaluate and improve your machine learning models.

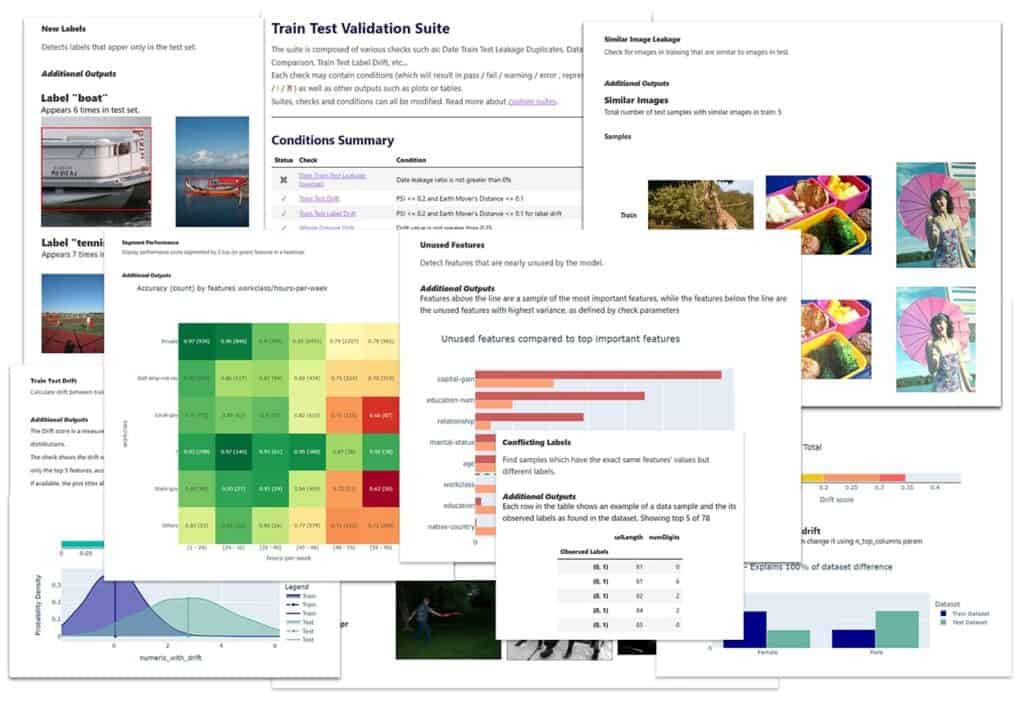

Deepchecks is built around two concepts: Checks, which are individual tests, and Suites, which are basically lists of Checks. Together, they help you test and assess your models.

This enables you to:

- Conduct data integrity checks

- Compare data distributions between training, validation, and test datasets

- Measure model performance across multiple metrics

- Diagnose sources of error and carry out debugging with model explainers

- Validate multiple models with a range of target metrics and thresholds

When you run them from the Python package, the results are compiled into a comprehensive report.
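
To make the relationship between Checks and Suites more concrete, here is a minimal sketch in plain Python. It is not the Deepchecks API: the `Check` and `CheckResult` classes, the `run_suite` helper, and the thresholds are all hypothetical names and values I made up to show the idea of a Suite being a list of Checks that produces one report.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Row = Dict[str, object]


@dataclass
class CheckResult:
    name: str
    value: float
    passed: bool


@dataclass
class Check:
    """Hypothetical check: computes one value and compares it to a limit."""
    name: str
    compute: Callable[[List[Row]], float]
    max_allowed: float

    def run(self, rows: List[Row]) -> CheckResult:
        value = self.compute(rows)
        return CheckResult(self.name, value, value <= self.max_allowed)


def missing_ratio(rows: List[Row]) -> float:
    """Share of cells that are None."""
    cells = [value for row in rows for value in row.values()]
    return sum(value is None for value in cells) / len(cells) if cells else 0.0


def duplicate_ratio(rows: List[Row]) -> float:
    """Share of rows that repeat an earlier row exactly."""
    if not rows:
        return 0.0
    unique = {tuple(sorted(row.items(), key=lambda kv: kv[0])) for row in rows}
    return 1 - len(unique) / len(rows)


def run_suite(checks: List[Check], rows: List[Row]) -> List[CheckResult]:
    """A suite is just a list of checks run against the same data."""
    return [check.run(rows) for check in checks]


rows = [
    {"age": 31, "city": "Oslo"},
    {"age": None, "city": "Oslo"},
    {"age": 31, "city": "Oslo"},
    {"age": 45, "city": "Bergen"},
]
suite = [
    Check("missing values", missing_ratio, max_allowed=0.2),
    Check("duplicate rows", duplicate_ratio, max_allowed=0.1),
]
report = run_suite(suite, rows)
for result in report:
    status = "PASS" if result.passed else "FAIL"
    print(f"{result.name}: {result.value:.3f} -> {status}")
```

The real package ships many more checks and a much richer report, but the shape is similar: each check yields a value plus a pass/fail condition, and the suite collects them.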

In addition, Deepchecks comes with an intuitive visual monitoring dashboard that allows you to monitor your ML models over time and take corrective action when needed.

After building a user base for their extensive test suites (2,700+ stars and 650,000+ downloads), Deepchecks is now making another bold move: They’ve just open-sourced their ML Monitoring solution.

With this move, Deepchecks is opening up ML model monitoring to community collaboration, in line with their mission to enable continuous validation of AI/ML systems for all.

This comes just a week after they released support for NLP modules in their testing offering, so the potential audience is constantly growing!

Key Features

This ML validation tool offers features that can help with testing and monitoring your ML models.

1. Data Integrity Checks

As most data science practitioners already know, data integrity is crucial to training a model successfully.

Deepchecks’ data integrity checks make integrity problems much easier to catch.

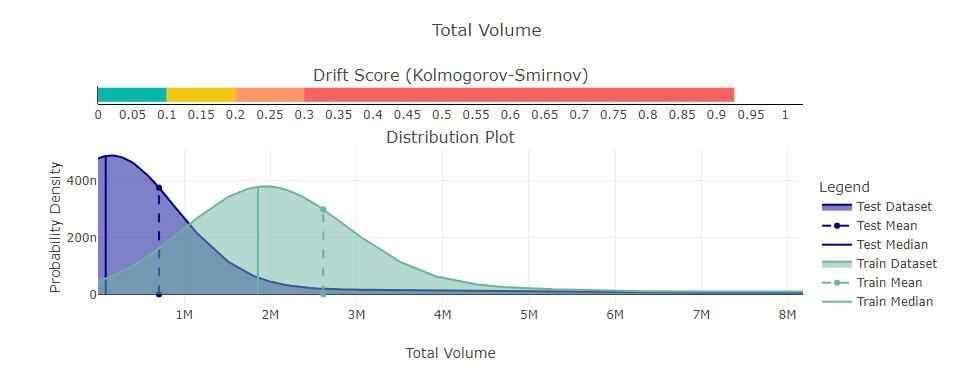

These checks validate numeric values, flag corrupted, missing, or duplicated values, and verify that the distributions of different datasets match up.

Some examples of built-in checks include:

- Conflicting labels

- Feature-feature correlation

- Feature-label correlation

- String mismatch

In my opinion, having an extra tool to watch out for data integrity issues can go a long way in boosting your ML performance metrics.

They even have a data integrity quickstart guide to get you started.
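
To give a feel for what a check like "Conflicting labels" looks for, here is a small sketch in plain Python. It assumes that a conflict means two samples with identical feature values but different labels. The `find_conflicting_labels` function and the sample data are my own illustration, not Deepchecks code.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Row = Dict[str, object]


def find_conflicting_labels(rows: List[Row], label: str) -> List[Tuple[Row, list]]:
    """Return feature combinations that appear with more than one label."""
    labels_by_features = defaultdict(set)
    for row in rows:
        # Use the features (everything except the label) as a hashable key.
        features = tuple(sorted((k, v) for k, v in row.items() if k != label))
        labels_by_features[features].add(row[label])
    return [
        (dict(features), sorted(labels))
        for features, labels in labels_by_features.items()
        if len(labels) > 1
    ]


samples = [
    {"plan": "pro", "seats": 5, "churned": "no"},
    {"plan": "pro", "seats": 5, "churned": "yes"},
    {"plan": "free", "seats": 1, "churned": "no"},
    {"plan": "free", "seats": 1, "churned": "no"},
]
conflicts = find_conflicting_labels(samples, label="churned")
for features, labels in conflicts:
    print(f"{features} appears with labels {labels}")
```

Rows like these are worth reviewing before training, since the model is being told two different answers for the same input.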

2. Train-Test Validation

Once you’re confident that your data is clean, the next step is to validate how your training and test sets relate to each other.

This can be a rather simple process with basic metrics, but Deepchecks takes this one step further with its comprehensive train-test validation feature!

In addition to the usual metrics like accuracy, precision, and recall, Deepchecks can also compare your training and test data directly.

Here are some examples:

- Feature drift

- Data leakage

- New label

- Multivariate drift

These checks are especially useful for comparing distributions between your training and test datasets.

By running these tests each time you retrain a model or refresh your data, you’ll be able to identify which models are better suited for deployment.
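
To show what measuring feature drift can mean in practice, here is a sketch of one common statistic, the two-sample Kolmogorov-Smirnov (KS) statistic, written in plain Python. I picked it for illustration only; I am not claiming this is what Deepchecks computes internally, and the `ks_statistic` function name is my own.

```python
from bisect import bisect_right
from typing import Sequence


def ks_statistic(train: Sequence[float], test: Sequence[float]) -> float:
    """Largest gap between the empirical CDFs of two samples (0 = same, 1 = disjoint)."""
    if not train or not test:
        raise ValueError("both samples must be non-empty")
    train_sorted = sorted(train)
    test_sorted = sorted(test)
    max_gap = 0.0
    # The largest gap always occurs at one of the observed values.
    for x in set(train_sorted) | set(test_sorted):
        cdf_train = bisect_right(train_sorted, x) / len(train_sorted)
        cdf_test = bisect_right(test_sorted, x) / len(test_sorted)
        max_gap = max(max_gap, abs(cdf_train - cdf_test))
    return max_gap


train_ages = [1, 2, 3, 4, 5]
same_score = ks_statistic(train_ages, [1, 2, 3, 4, 5])
shifted_score = ks_statistic(train_ages, [11, 12, 13, 14, 15])
print(f"identical feature: {same_score:.2f}")
print(f"shifted feature:   {shifted_score:.2f}")
```

A score near 0 suggests the feature looks the same in both datasets, while a score near 1 suggests the test data has moved far away from what the model was trained on.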

3. Model Evaluation

Evaluating the performance of a model is often a repetitive but necessary task.

However, Deepchecks makes it easier through its streamlined and comprehensive metrics-based ML evaluation platform.

It provides you with an assortment of metrics that can help you measure both the accuracy and robustness of your models.

Some examples of the evaluation checks the platform provides are:

- Weak segments performance

- Unused features

- Simple model comparison

- Calibration score

Taking a comprehensive look at the performance of your models is a great way to ensure that you have a model that performs well in production. These additional evaluation methods, on top of the usual metrics, will be useful for any data practitioner.
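
As an example of the idea behind a check like "Simple model comparison", here is a sketch in plain Python that compares a model against a naive baseline that always predicts the most common training label. The baseline choice, the function names, and the toy labels are my own assumptions for illustration, not Deepchecks code.

```python
from collections import Counter
from typing import List, Sequence


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Share of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def majority_baseline(y_train: Sequence[str], n_predictions: int) -> List[str]:
    """Naive model: always predict the most frequent training label."""
    most_common_label = Counter(y_train).most_common(1)[0][0]
    return [most_common_label] * n_predictions


y_train = ["no", "no", "no", "yes"]
y_test = ["no", "no", "yes", "no", "yes"]
model_preds = ["no", "no", "yes", "yes", "yes"]  # stand-in for a real model's output

baseline_preds = majority_baseline(y_train, len(y_test))
model_acc = accuracy(y_test, model_preds)
baseline_acc = accuracy(y_test, baseline_preds)
gain = model_acc - baseline_acc
print(f"model: {model_acc:.2f}, baseline: {baseline_acc:.2f}, gain: {gain:.2f}")
```

If the gain over such a simple baseline is small, the extra complexity of your model may not be paying off.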

4. ML Validation Continuity from Research to Production

Most ML validation tools focus on the research stage of development.

While this is important to ensure that your model works as expected, it’s also essential to be able to carry out validation in production.

This is where Deepchecks really stands out since it offers features that help with monitoring models in production.

Their platform makes it easy to reuse the exact subsets from research in CI/CD and production.

5. Code-Level Root Cause Analysis

The last feature I wanted to mention is the ability of Deepchecks to help you with code-level root cause analysis.

By segmenting your data, Deepchecks can help you find where your model goes wrong faster.

This can be especially useful when debugging as it allows you to pinpoint the exact subsets of data that cause problems.
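
Here is a rough sketch of the segmentation idea in plain Python: group predictions by the value of one feature and rank the groups by error rate. The `error_rate_by_segment` function and the column names are hypothetical, and the real tool is far more thorough than this.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

Row = Dict[str, object]


def error_rate_by_segment(
    rows: List[Row], segment_key: str, true_key: str, pred_key: str
) -> List[Tuple[object, float]]:
    """Return (segment, error rate) pairs, worst segment first."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for row in rows:
        segment = row[segment_key]
        totals[segment] += 1
        if row[true_key] != row[pred_key]:
            errors[segment] += 1
    rates = {segment: errors[segment] / totals[segment] for segment in totals}
    return sorted(rates.items(), key=lambda item: item[1], reverse=True)


predictions = [
    {"device": "mobile", "label": 1, "predicted": 0},
    {"device": "mobile", "label": 0, "predicted": 1},
    {"device": "mobile", "label": 1, "predicted": 1},
    {"device": "desktop", "label": 1, "predicted": 1},
    {"device": "desktop", "label": 0, "predicted": 0},
    {"device": "desktop", "label": 0, "predicted": 1},
    {"device": "desktop", "label": 1, "predicted": 1},
]
ranked = error_rate_by_segment(predictions, "device", "label", "predicted")
for segment, rate in ranked:
    print(f"{segment}: {rate:.0%} errors")
```

In this toy data the mobile segment stands out, which tells you where to start digging.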

Deepchecks Products

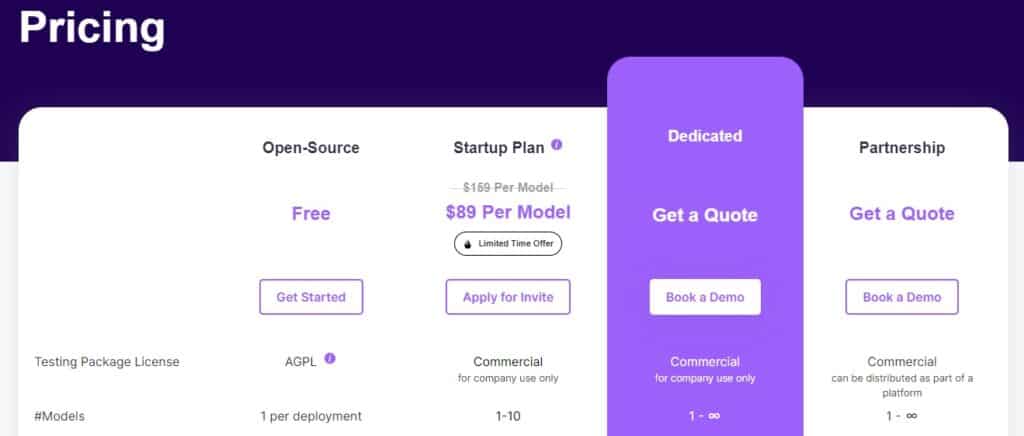

Deepchecks offers several products and features according to the plan you choose. Although the Deepchecks package is open-source, they do offer more advanced plans if you need to run more models in production.

Deepchecks is available in four versions: the Open-Source Plan, Startup Plan, Dedicated Plan, and Partnership Plan.

1. Deepchecks Open-Source

The open-source plan allows you to install, configure, and use Deepchecks as a standalone Python library.

This free version gives you 1 model per deployment and all the testing and monitoring features, and lets you generate reports for model evaluation within your Python environment.

Do take note that deployments will be on-prem, since everything runs locally on your machine.

This option would be the best for most ML practitioners who need comprehensive reporting on their ML models throughout the testing and monitoring phases.

However, the open-source version is slightly lacking in customer support, which is understandable.

2. Deepchecks Startup Plan

The Startup Plan offers additional features such as custom metrics, automated data checks, and secure user authentication via an API key.

This paid plan offers more flexibility and scalability when it comes to model deployments and also allows for up to 10 models per deployment.

Do take note that the Startup Plan is meant only for company use with smaller teams building ML models in production.

3. Deepchecks Dedicated Plan

The Dedicated Plan provides the same features as the Startup version but with more advanced scalability options.

This plan gives your team more room to grow, since you’ll have an unlimited number of models per deployment.

4. Deepchecks Partnership Plan

The Partnership Plan is for those who intend to use Deepchecks commercially as part of their own product.

This is for enterprises serious about integrating Deepchecks and its features into a proprietary platform.

Tool Integrations

Deepchecks works with many common tools used in ML pipelines, such as:

- Scikit-learn

- TensorFlow

- NumPy

- Databricks

- H2O.ai

- PyCaret

- Weights & Biases

- Hugging Face

- PyTorch

- MLflow

- Pandas

- Pytest

- GitHub

- Apache Airflow

- Neptune.ai

- CircleCI

- Jenkins

This makes it easy to integrate the Deepchecks tool into your existing workflow and data pipeline.

Supported Data Types

Deepchecks supports the most common data types used in training machine learning models.

1. Tabular: Handles datasets stored in popular tabular data formats like the pandas DataFrame

2. NLP: Covers the NLP or NLU pipeline end to end, triggering alerts on anything from data integrity problems in raw data to distribution changes and concept drift.

3. Vision: Handles training image datasets used for computer vision

Final Thoughts

As you can see, Deepchecks is a powerful tool to ensure high-quality ML models. With its comprehensive suite of validation features, you can easily monitor and evaluate your model performance in the research and production stages.

Given this, Deepchecks will be an asset for data scientists or ML engineers looking for a reliable platform for their machine learning projects.

I also like that they have made their ML production monitoring open-source, which is a huge help to the community!

If you’re working on complex ML projects, then Deepchecks might be the perfect tool for you.

Try it out by following the monitoring quickstart from their GitHub!